Seminar: 25th May 2017, 3 p.m., Boldrewood Campus, Boldrewood Cafe Seminar Room (176/2013)

Towards billion atom simulations of magnetic materials on ARCHER

Dr Richard Evans

University of York

- Categories: ARCHER, C++, Complex Systems, Git, HPC, Micromagnetics, Molecular Dynamics, MPI, Multi-core, NGCM, Scientific Computing, Software Engineering
- Submitter: Hans Fangohr

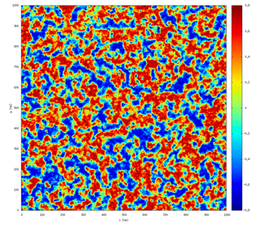

Snapshot of the z-component of the magnetisation during laser-induced switching, simulated using 12,288 cores on ARCHER.

Magnetic materials are essential to a wide range of technologies, from data storage to cancer treatment to the permanent magnets used in wind generators. New developments in magnetic materials promise large increases in device performance, but progress is limited by our understanding of magnetic properties at the atomic scale. Atomistic spin dynamics simulations provide a natural way to study magnetic processes on the nanoscale by treating each atom as possessing a localised spin magnetic moment. The localised nature of the spins allows the simulation of complex physical phenomena, such as phase transitions, laser heating and antiferromagnetic dynamics, in complex systems including nanoparticles, surfaces and interfaces. However, such approaches are computationally expensive, and simulations of more than a few thousand atoms require parallel computers.

VAMPIRE is an open-source software package for parallel atomistic simulations of magnetic materials. In this talk I will give an overview of the recent eCSE project porting VAMPIRE to ARCHER, the UK national supercomputing service. During the project we made major changes to the code's data input and output routines, removing the previous bottleneck in generating snapshots of the atomic-scale magnetic configuration during a simulation. The new output code uses the full capabilities of the parallel file system on ARCHER, achieving effective output bandwidths in excess of 30 GB/s. The new I/O routines enable data output from simulations of previously unattainable size, and we have demonstrated a large-scale simulation of ultrafast heat-induced switching and domain-wall dynamics in a system of over 918 million Fe and Gd atoms. We have found domain-wall velocities in excess of 4 km/s, induced by ultrafast laser heating and caused by local inhomogeneities in the Fe and Gd sample.

This work was funded under the embedded CSE (eCSE) programme of the ARCHER UK National Supercomputing Service (http://www.archer.ac.uk).