Deep Optimisation

- Started: 19th September 2016

- Research Team: Jamie Caldwell

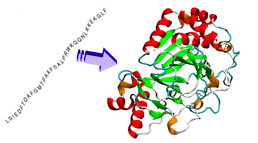

In engineering optimisation and design, the idea of breaking a problem into sub-problems, solving the sub-problems and then assembling the sub-solutions is familiar and intuitive. However, such problem decomposition is notoriously difficult to automate, because it requires knowledge of how to decompose the problem, that is, how to 'divide it at its natural joints'. Meanwhile, deep learning techniques in neural networks have received a lot of attention following recent high-profile successes. These methods build hierarchical representations of a problem domain in a distributed manner, and a key innovation is to learn these representations layer by layer, from the bottom up. Deep Optimisation is a new technique that aims to automate problem decomposition by bringing deep learning and distributed optimisation processes together. The project aims to develop and test algorithms based on this approach using GPU programming on large-scale distributed compute clusters. These algorithms will be applied to the protein structure prediction problem and compared with existing state-of-the-art methods.
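To make the decomposition idea concrete, the following is a minimal Python sketch of a toy problem whose decomposition is *assumed to be known in advance*. The bitstring is split into hypothetical fixed-size blocks, each scored by a deceptive "trap" function. The trap function, the block sizes and the helper names `trap`, `fitness`, `solve_by_decomposition` and `hill_climb` are all illustrative assumptions and do not come from this project. This is not an implementation of Deep Optimisation, which aims to discover such block structure automatically rather than being given it.

```python
from itertools import product

# Hypothetical toy problem: 5 independent sub-problems of 4 bits each.
BLOCK_SIZE = 4
NUM_BLOCKS = 5


def trap(block):
    """Deceptive trap: all-ones scores highest; otherwise fewer ones is better."""
    ones = sum(block)
    k = len(block)
    return k if ones == k else k - 1 - ones


def fitness(bits, block_size=BLOCK_SIZE):
    """Total fitness is the sum of the independent per-block trap scores."""
    return sum(trap(bits[i:i + block_size]) for i in range(0, len(bits), block_size))


def solve_by_decomposition(num_blocks, block_size):
    """Solve each block exhaustively, then concatenate the sub-solutions.

    This only works because the block boundaries (the 'natural joints')
    are supplied; finding them automatically is the hard part.
    """
    solution, evaluations = [], 0
    for _ in range(num_blocks):
        candidates = list(product((0, 1), repeat=block_size))
        evaluations += len(candidates)
        solution.extend(max(candidates, key=trap))
    return solution, evaluations


def hill_climb(bits, block_size):
    """Single-bit-flip hill climber on the whole string, with no decomposition."""
    bits = list(bits)
    improved = True
    while improved:
        improved = False
        for i in range(len(bits)):
            candidate = bits.copy()
            candidate[i] ^= 1  # flip one bit
            if fitness(candidate, block_size) > fitness(bits, block_size):
                bits, improved = candidate, True
    return bits


solution, evals = solve_by_decomposition(NUM_BLOCKS, BLOCK_SIZE)
print(fitness(solution), evals)  # 20 80

stuck = hill_climb([0] * (NUM_BLOCKS * BLOCK_SIZE), BLOCK_SIZE)
print(fitness(stuck))  # 15
```

With the block boundaries given, the optimum is found in 80 evaluations (5 blocks of 16 candidates each) instead of the 2^20 needed to enumerate the whole string. A local search that ignores the structure, started from all zeros, stays at the deceptive local optimum (fitness 15 rather than 20).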

Categories

Life sciences simulation: Bioinformatics

Algorithms and computational methods: Artificial Neural Networks, Machine learning, Multi-core, Optimisation, statistical analysis, Support Vector Machine

Programming languages and libraries: C++, CUDA, CUDA Fortran, GPU-libs, MPI, OpenMP

Computational platforms: ARCHER, GPU, HECToR

Transdisciplinary tags: Computer Science, HPC, NGCM, Scientific Computing