Towards design patterns for robot swarms

Research Team: Lenka Pitonakova

Investigators: Richard Crowder, Seth Bullock

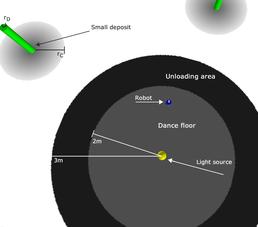

Screenshot of the simulation environment: a robot is placed in the 'base', surrounded by task deposits. Each deposit has a colour gradient around it that guides nearby robots towards it. In our experiments, we usually use 25 or more robots.
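The caption says that colour gradients guide nearby robots towards deposits. The sketch below is a minimal illustration of one way such gradient following could work. It assumes a linear fall-off in intensity and a greedy controller that compares readings in eight headings. The deposit positions, `GRADIENT_RADIUS`, `STEP` and all function names are hypothetical and are not taken from the project's simulator.

```python
import math

# Hypothetical deposit layout and gradient parameters; illustrative values only.
DEPOSITS = [(10.0, 10.0), (-8.0, 4.0)]
GRADIENT_RADIUS = 6.0  # colour intensity falls linearly to zero at this distance
STEP = 0.5  # distance a robot moves per control step


def intensity(x, y):
    """Colour intensity sensed at (x, y): 1 at a deposit, 0 beyond the radius."""
    best = 0.0
    for dx, dy in DEPOSITS:
        d = math.hypot(x - dx, y - dy)
        best = max(best, 1.0 - d / GRADIENT_RADIUS)
    return best


def gradient_step(x, y):
    """Move one STEP in whichever of 8 headings gives the highest reading.

    Returns the current position unchanged if no heading improves on it,
    e.g. outside every gradient (intensity 0) or when already at a deposit.
    """
    best_pos, best_val = (x, y), intensity(x, y)
    for k in range(8):
        angle = k * math.pi / 4
        nx, ny = x + STEP * math.cos(angle), y + STEP * math.sin(angle)
        val = intensity(nx, ny)
        if val > best_val:
            best_pos, best_val = (nx, ny), val
    return best_pos


def follow(x, y, max_steps=100):
    """Repeat gradient steps until no heading improves the reading."""
    for _ in range(max_steps):
        nx, ny = gradient_step(x, y)
        if (nx, ny) == (x, y):
            break
        x, y = nx, ny
    return x, y
```

A robot starting two units from the first deposit, for example `follow(12.0, 10.0)`, climbs the gradient and stops within one step of it. A robot outside every gradient stays put, which is why, in a scheme like this, exploration outside the gradients would need a separate behaviour such as a random walk.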

Swarm robotics is an interdisciplinary field that seeks to design the behaviour of robots that can cooperate effectively on tasks such as search and retrieval, reconnaissance and construction. Unlike conventional engineering, swarm engineering requires a "bottom-up" approach. This is because collective intelligence manifests itself when complex behaviour emerges at the global level of the swarm from interactions between numerous individuals with limited capabilities. In this project, we aim to develop a theoretical understanding of swarm intelligence and a set of design patterns for effective robot swarms.

While many swarm robotics experiments have been performed both in simulation and in the real world, a framework that organises and explains their results is still missing. Robot swarms are usually designed and optimised for specific tasks. As a result, it is not clear which design decisions carry over to other tasks, or why swarms designed in a particular way exhibit the behaviour they do. A set of design patterns would be invaluable here. Such patterns would take into account environmental conditions, such as task distribution and dynamics, and tell us what behaviour individual robots should have for the collective to perform effectively. We have shown that a useful approach is to consider information flow, i.e., the way information is scouted for and passed between robots, together with the costs of converting that information into work performance. This approach helps explain which behavioural strategies suit different environments, and why. Our preliminary findings can be applied to domains such as collective foraging, preferential completion of tasks based on priority, and adaptive long-term division of labour in dynamic environments.

Because the translation from individual to collective behaviour is highly non-linear, the dynamics of swarms are mathematically intractable, and agent-based simulations are required. In our work, we rely extensively on the Iridis cluster to run experiments with varying robot parameters and environmental conditions. The supercomputer's role is invaluable: each experiment has to be repeated tens of times in order to avoid bias caused by stochasticity in the environment or in the robots themselves. Moreover, data usually needs to be analysed over a large parameter space. Typically, hundreds or even thousands of runs are required to understand the importance of a single parameter. On Iridis, such a study is achievable within hours, but it would take weeks on a personal computer and an infeasible amount of time with a population of real robots.
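To make the run-count arithmetic concrete, the following minimal Python sketch expands a parameter grid into independent, seeded runs and summarises each configuration by its mean and standard deviation. The parameter names (`swarm_size`, `deposit_distance`), their values, the 30 repetitions and the `run_simulation` stand-in are all hypothetical. A real study would call the project's simulator instead. On a cluster, each (configuration, repetition) pair would typically be submitted as a separate batch job rather than looped over in one process.

```python
import itertools
import random
import statistics

# Hypothetical parameter grid: names and values are illustrative only.
PARAMS = {
    "swarm_size": [25, 50, 100],
    "deposit_distance": [5.0, 10.0, 20.0],
}
REPETITIONS = 30  # each configuration repeated to average out stochasticity


def run_simulation(swarm_size, deposit_distance, seed):
    """Placeholder for one agent-based run; returns a made-up performance score."""
    rng = random.Random(seed)  # per-run seed makes every run reproducible
    # Stand-in metric: a noisy value, not a model of real swarm performance.
    return swarm_size / deposit_distance + rng.gauss(0.0, 1.0)


def build_jobs(params, repetitions):
    """Expand the grid into one job per (configuration, repetition) pair."""
    names = sorted(params)
    jobs = []
    for values in itertools.product(*(params[n] for n in names)):
        config = dict(zip(names, values))
        for rep in range(repetitions):
            jobs.append((config, rep))
    return jobs


def summarise(jobs):
    """Run every job and return (mean, standard deviation) per configuration."""
    results = {}
    for seed, (config, _rep) in enumerate(jobs):
        key = tuple(sorted(config.items()))
        results.setdefault(key, []).append(run_simulation(seed=seed, **config))
    return {k: (statistics.mean(v), statistics.stdev(v)) for k, v in results.items()}


if __name__ == "__main__":
    jobs = build_jobs(PARAMS, REPETITIONS)
    print(len(jobs))  # 3 * 3 * 30 = 270 runs
    for key, (mean, sd) in summarise(jobs).items():
        print(dict(key), round(mean, 2), round(sd, 2))
```

With three values for each of two parameters and 30 repetitions, the grid already yields 270 runs. Adding a third parameter with ten values would raise that to 2,700, which is why sweeps of this kind are spread across a cluster rather than run sequentially.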

Categories

Life sciences simulation: Swarm Behaviour

Physical Systems and Engineering simulation: Robotics

Algorithms and computational methods: Agents

Visualisation and data handling software: Pylab

Software Engineering Tools: Eclipse, Git

Programming languages and libraries: C++, Python

Computational platforms: Linux, Mac OS X

Transdisciplinary tags: Complex Systems